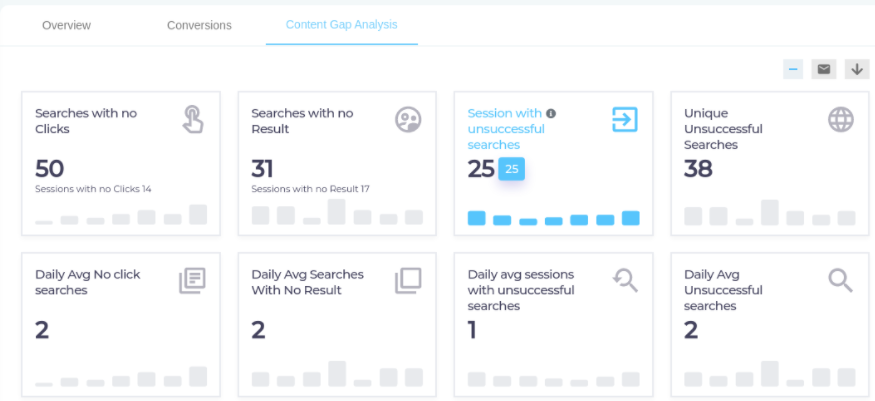

Content Gap Analysis

The top report in the Content Gap Analysis tab is Tiles, which captures eight metrics:

- Searches with No Clicks

- Searches with No Result

- Sessions with Unsuccessful Searches

- Unique Unsuccessful Searches

- Daily Avg No Click Searches

- Daily Avg Searches with No Result

- Daily Avg Sessions with Unsuccessful Searches

- Daily Avg Unsuccessful Searches

Each metric has its own tile, which shows an approximate value, such as Unique Unsuccessful Searches: 11K and Searches with No Result: 7K. Hovering over a tile shows the exact value, such as Unique Unsuccessful Searches: 11232 and Searches with No Result: 7321.
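The tile values above are abbreviated forms of the exact counts. The page does not say whether a tile rounds or truncates, so the minimal sketch below assumes truncation to whole thousands; `abbreviate` is a hypothetical helper, not part of the product. Both example values give the same result under either rule.

```python
def abbreviate(n: int) -> str:
    """Hypothetical tile formatter: truncates to whole thousands (K) or millions (M)."""
    if n >= 1_000_000:
        return f"{n // 1_000_000}M"
    if n >= 1_000:
        return f"{n // 1_000}K"
    return str(n)


print(abbreviate(11232))  # 11K (tile) vs 11232 (hover)
print(abbreviate(7321))   # 7K (tile) vs 7321 (hover)
```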

Tiles

Searches with No Clicks

The total number of searches in which the user doesn't click any result. A no-click search occurs only when a query returns results but the user doesn't click any of them.

A second metric on the tile is Sessions with No Click. It is the total number of sessions in which there was at least one search with no click.
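The two numbers on this tile can be sketched as follows. The `Search` record and its fields (`session_id`, `result_count`, `click_count`) are a hypothetical log schema chosen for illustration. A search counts as no-click only if it returned at least one result and received zero clicks, and a session is counted once no matter how many no-click searches it contains.

```python
from dataclasses import dataclass


@dataclass
class Search:
    # Hypothetical search-log record, not the product's actual schema.
    session_id: str
    query: str
    result_count: int  # number of results returned
    click_count: int   # number of results the user clicked


def is_no_click(s: Search) -> bool:
    # Results were returned, but the user clicked none of them.
    return s.result_count > 0 and s.click_count == 0


def no_click_metrics(searches):
    """Return (Searches with No Click, Sessions with No Click)."""
    no_click = [s for s in searches if is_no_click(s)]
    sessions = {s.session_id for s in no_click}  # each session counted once
    return len(no_click), len(sessions)


log = [
    Search("s1", "vpn setup", 12, 0),
    Search("s1", "vpn setup guide", 8, 2),
    Search("s2", "reset password", 5, 0),
    Search("s2", "sso error 42", 0, 0),  # no result, so not a no-click search
    Search("s3", "billing", 20, 1),
]
print(no_click_metrics(log))  # (2, 2)
```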

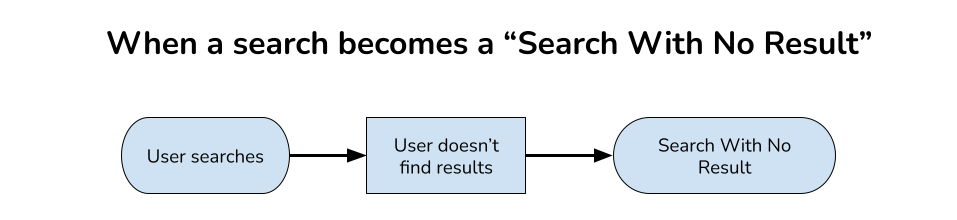

Searches with No Result

The total number of searches for which no result is returned.

A search can return no results for several reasons:

- No documents matching the query exist in your data repositories.

- The user who searched doesn't have permission to view the matching documents.

- The search client is not connected to the content source where the documents are stored.

Based on this information, content managers can choose the best way to fill content gaps, such as creating new articles or educating users about advanced search.

A second metric on the tile is Sessions with No Result. It is the total number of sessions in which there was at least one search with no result.
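A minimal sketch of the two numbers on this tile, assuming a hypothetical log where each search records its `session_id` and `result_count`. A no-result search is any search with zero results, and Sessions with No Result counts each session once, however many no-result searches it contains.

```python
from dataclasses import dataclass


@dataclass
class Search:
    # Hypothetical search-log record, not the product's actual schema.
    session_id: str
    query: str
    result_count: int


def no_result_metrics(searches):
    """Return (Searches with No Result, Sessions with No Result)."""
    no_result = [s for s in searches if s.result_count == 0]
    return len(no_result), len({s.session_id for s in no_result})


log = [
    Search("s1", "sso error 42", 0),
    Search("s1", "sso error", 0),
    Search("s2", "vpn setup", 12),
    Search("s3", "legacy api v1", 0),
]
print(no_result_metrics(log))  # (3, 2): three searches across sessions s1 and s3
```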

Sessions with Unsuccessful Searches

This counts the number of sessions where at least one unsuccessful search was recorded. Both Searches with No Result and Searches with No Click are Unsuccessful Searches.
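Because a session is counted once if it contains any unsuccessful search, this metric is the size of the union of the no-result sessions and the no-click sessions, not their sum. The sketch below illustrates that with a hypothetical log schema (`session_id`, `result_count`, `click_count`); session `s1` has both kinds of unsuccessful search but is counted only once.

```python
from dataclasses import dataclass


@dataclass
class Search:
    # Hypothetical search-log record, not the product's actual schema.
    session_id: str
    result_count: int
    click_count: int


def session_counts(searches):
    """Return (sessions with no result, sessions with no click, sessions with unsuccessful searches)."""
    no_result = {s.session_id for s in searches if s.result_count == 0}
    no_click = {s.session_id for s in searches if s.result_count > 0 and s.click_count == 0}
    unsuccessful = no_result | no_click  # set union: a session is counted once
    return len(no_result), len(no_click), len(unsuccessful)


log = [
    Search("s1", 0, 0),  # no result
    Search("s1", 9, 0),  # no click, same session
    Search("s2", 4, 0),  # no click
    Search("s3", 7, 3),  # successful
]
print(session_counts(log))  # (1, 2, 2)
```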

Unique Unsuccessful Searches

The total number of unsuccessful searches. A search is unsuccessful in two scenarios:

- User searches and doesn't find any results (Searches with No Result)

- User searches, finds results, but doesn't click on any result (Searches with No Click)
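The two scenarios above are mutually exclusive, so every search falls into exactly one of three outcomes, and the unsuccessful total is the no-result count plus the no-click count. The sketch below assumes a hypothetical log of `(query, result_count, click_count)` records. It counts every search; the page does not define how "Unique" deduplicates searches, so no deduplication is attempted here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Search:
    # Hypothetical search-log record, not the product's actual schema.
    query: str
    result_count: int
    click_count: int


def outcome(s: Search) -> str:
    """Classify a search into exactly one outcome."""
    if s.result_count == 0:
        return "no_result"  # Searches with No Result
    if s.click_count == 0:
        return "no_click"   # Searches with No Click
    return "successful"


log = [
    Search("sso error 42", 0, 0),
    Search("vpn setup", 12, 0),
    Search("reset password", 5, 1),
    Search("legacy api", 0, 0),
]
counts = Counter(outcome(s) for s in log)
unsuccessful = counts["no_result"] + counts["no_click"]
print(unsuccessful)  # 3
```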

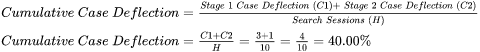

Daily Average Searches with No Click

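The average number of Searches with No Click per day over the selected Date Range.

Example: If the Date Range is from 2020-01-10 to 2020-02-09 (31 days, counting both the start and end dates), then Daily Average Searches with No Click = Searches with No Click / 31.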

Daily Average Searches with No Result

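The average number of Searches with No Result per day over the selected Date Range.

Example: If the Date Range is from 2020-01-10 to 2020-02-09 (31 days, counting both the start and end dates), then Daily Average Searches with No Result = Searches with No Result / 31.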

Daily Average Sessions with Unsuccessful Searches

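The average number of Sessions with Unsuccessful Searches per day over the selected Date Range.

Example: If the Date Range is from 2020-01-10 to 2020-02-09 (31 days, counting both the start and end dates), then Daily Average Sessions with Unsuccessful Searches = Sessions with Unsuccessful Searches / 31.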

Daily Average Unsuccessful Searches

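The average number of Unsuccessful Searches per day over the selected Date Range.

Example: If the Date Range is from 2020-01-10 to 2020-02-09 (31 days, counting both the start and end dates), then Daily Average Unsuccessful Searches = Unsuccessful Searches / 31.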
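All four daily averages divide a metric's total by the number of days in the Date Range. The sketch below assumes that both the start and end dates are included (the page does not state this), which gives 31 days for 2020-01-10 to 2020-02-09. The totals are made-up numbers for illustration only, and `days_in_range` and `daily_average` are hypothetical helpers.

```python
from datetime import date


def days_in_range(start: date, end: date) -> int:
    """Number of days in the Date Range, assuming both endpoints are included."""
    return (end - start).days + 1


def daily_average(total: int, start: date, end: date) -> float:
    """Average of a metric's total over each day in the Date Range."""
    return total / days_in_range(start, end)


start, end = date(2020, 1, 10), date(2020, 2, 9)

# Hypothetical totals for the selected Date Range (not real data).
totals = {
    "Searches with No Click": 930,
    "Searches with No Result": 310,
    "Sessions with Unsuccessful Searches": 465,
    "Unsuccessful Searches": 1240,
}

print(days_in_range(start, end))  # 31
for metric, total in totals.items():
    print(f"Daily Average {metric}: {daily_average(total, start, end):.1f}")
```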

Other Reports in Content Gap Analysis

- Unsuccessful Searches. This graph shows searches with no results and searches with no clicks over a selected time period. It helps you track keywords that return no results or get no clicks.

- Sessions with Unsuccessful Searches. This bar graph compares the number of unsuccessful sessions on a weekly basis.

- Searches with No Click. Lists queries for which users didn't click on any result.

- Searches with No Result. Lists queries for which users didn't find any result.

- High Conversion Results Not On Page One. Shows frequently clicked results that don't appear on the first page of search results.

- Average Time on Documents. This report captures the average time spent on the documents by the users across sessions.
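The Searches with No Click and Searches with No Result reports list queries rather than totals. A minimal sketch of that per-query grouping, assuming a hypothetical log of `(query, result_count, click_count)` tuples and ranking queries by how often they occurred; the actual reports may group or sort queries differently.

```python
from collections import Counter

# Hypothetical log rows: (query, result_count, click_count). Not real data.
log = [
    ("vpn setup", 12, 0),
    ("vpn setup", 12, 0),
    ("reset password", 5, 0),
    ("sso error 42", 0, 0),
    ("sso error 42", 0, 0),
    ("sso error 42", 0, 0),
    ("billing", 20, 1),
]

# Queries that returned results but got no click.
no_click = Counter(q for q, results, clicks in log if results > 0 and clicks == 0)
# Queries that returned no results at all.
no_result = Counter(q for q, results, clicks in log if results == 0)

print(no_click.most_common())   # [('vpn setup', 2), ('reset password', 1)]
print(no_result.most_common())  # [('sso error 42', 3)]
```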

Download or Share

Check out Download and Share an Analytics Report